Kubernetes, or k8s for short, is an open source platform pioneered by Google. It started as a simple container orchestration tool but has grown into a cloud-native platform. It’s one of the most significant advancements in IT since the public cloud came into being in 2009, and it has an unparalleled 5-year 30% growth rate in both market revenue and overall adoption.

We asked Devs, DevOps and businesses to tell us how they are using Kubernetes, and it is a fascinating read.

Kubernetes is popular for its appealing architecture, its large and active community, and the extensibility that lets countless development teams deliver and maintain software at scale by automating container orchestration.

Kubernetes maps out how applications should work and interact with other applications. Thanks to its elasticity, it can scale services up and down as required, perform rolling updates, and switch traffic between different versions of your applications to test features or roll back problematic deployments.
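As a rough illustration of what a rolling update does, the sketch below simulates how the counts of old and new pods might change while a Deployment replaces one version with another. `maxSurge` and `maxUnavailable` are real Deployment settings (both default to 25%), but the `rolling_update` function is hypothetical: it takes them as absolute pod counts and assumes new pods are ready the instant they are created, whereas the real Deployment controller waits for readiness before removing old pods.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Simulate (old, new) pod counts at each step of a simplified rolling update.

    Hypothetical helper: assumes new pods are ready the moment they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Create new pods, never exceeding replicas + max_surge pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Remove old pods, keeping at least replicas - max_unavailable pods running.
        old -= max(0, min(old, (old + new) - (replicas - max_unavailable)))
        history.append((old, new))
    return history


print(rolling_update(replicas=3, max_surge=1, max_unavailable=0))
# prints [(3, 0), (2, 1), (1, 2), (0, 3)]
```

With `max_unavailable=0` the service never drops below three running pods during the update; it briefly runs four instead, which is the trade-off `max_surge` controls.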

Kubernetes has emerged as a leading choice for organisations looking to build multi-cloud environments. All major public clouds have adopted Kubernetes and offer their own distributions, such as Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine and Azure Kubernetes Service.

What are containers?

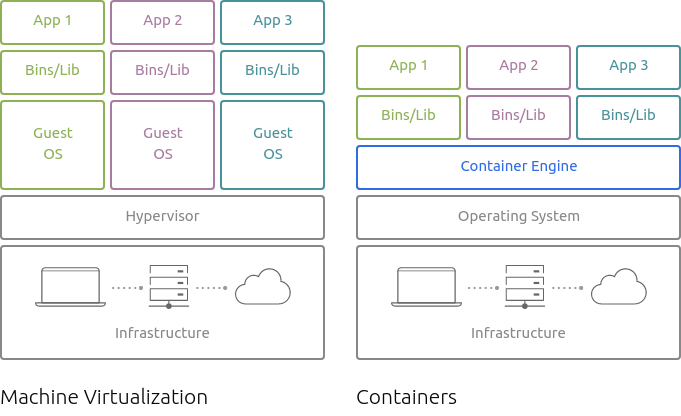

Containers are a technology that allows the user to divide up a machine so that it can run more than one application (in the case of process containers) or operating system instance (in the case of system containers) on the same kernel and hardware while keeping the workloads isolated from one another. Containers are a modern, more lightweight way to virtualise infrastructure than traditional virtual machines: all containers on a single host share the host OS kernel and other resources, so they require less memory, make better use of resources and start up several orders of magnitude faster.

Inside Google alone, at least two billion containers are started each week to run its vast operations.

Kubernetes history and ecosystem

Kubernetes (from the Greek ‘κυβερνήτης’, meaning ‘helmsman’) was originally developed by Google. Its design was heavily influenced by Google’s ‘Borg’ project, a similar system Google uses to run much of its infrastructure. Kubernetes has since been donated to the Cloud Native Computing Foundation, a Linux Foundation project whose members include Google, Cisco, IBM, Docker, Microsoft, AWS and VMware.

Did you know? The font used in the Kubernetes logo is the Ubuntu font!

How does Kubernetes work?

Kubernetes works by joining a group of physical or virtual host machines, referred to as “nodes”, into a cluster that manages containers. This creates a “supercomputer” with more processing power, more storage capacity and greater network capabilities than any single machine would have on its own. The nodes include all the services needed to run “pods”, which in turn run one or more containers. A pod corresponds to a single instance of an application in Kubernetes.
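The cluster, node, pod and container hierarchy can be sketched as plain data structures. The classes, field names and capacity figures below are hypothetical, chosen only for illustration; the real objects live in the Kubernetes API. Note that the pooled “supercomputer” is a logical view: a single pod still has to fit on one node.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    # A pod wraps one or more containers that always run together on one node.
    name: str
    containers: list[Container]


@dataclass
class Node:
    name: str
    cpu_millicores: int  # hypothetical capacity figures for illustration
    memory_mib: int
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: list[Node]

    def total_capacity(self) -> tuple[int, int]:
        """Pooled CPU (millicores) and memory (MiB) across every node."""
        return (sum(n.cpu_millicores for n in self.nodes),
                sum(n.memory_mib for n in self.nodes))

    def container_names(self) -> list[str]:
        """Walk cluster -> nodes -> pods -> containers."""
        return [c.name for n in self.nodes for p in n.pods for c in p.containers]


web = Pod("web-1", [Container("web", "nginx:1.25"), Container("log-shipper", "busybox:1.36")])
cluster = Cluster([Node("node-a", 2000, 4096, [web]), Node("node-b", 2000, 4096)])
print(cluster.total_capacity())   # (4000, 8192)
print(cluster.container_names())  # ['web', 'log-shipper']
```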

One node of the cluster (or more, for larger or highly available clusters) is designated as the “control plane”. The control plane node then takes responsibility for the cluster as the orchestration layer, scheduling and allocating tasks to the other “worker” nodes in a way that makes the best use of the cluster’s resources. All operator interaction with the cluster goes through this main node, whether that is changing the configuration, executing or terminating workloads, or controlling ingress and egress on the network.
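The “allocate tasks to worker nodes” step can be pictured as a filter-then-score loop, loosely modelled on the filtering and scoring phases of kube-scheduler. Everything below is a simplified assumption: the `NodeState` type and function names are hypothetical, only CPU and memory requests are considered, and the scoring rule (prefer the node with the most capacity left after placement) is just one possible strategy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeState:
    name: str
    cpu_capacity: int  # millicores
    mem_capacity: int  # MiB
    cpu_used: int = 0
    mem_used: int = 0


def fits(node: NodeState, cpu: int, mem: int) -> bool:
    """Filter step: can this node hold the pod's resource requests?"""
    return (node.cpu_used + cpu <= node.cpu_capacity
            and node.mem_used + mem <= node.mem_capacity)


def score(node: NodeState, cpu: int, mem: int) -> float:
    """Score step: average fraction of CPU and memory left free after placement."""
    cpu_left = (node.cpu_capacity - node.cpu_used - cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_used - mem) / node.mem_capacity
    return (cpu_left + mem_left) / 2


def schedule(cpu: int, mem: int, nodes: list[NodeState]) -> Optional[str]:
    """Place a pod on the best feasible node, or return None (pod stays Pending)."""
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None
    best = max(feasible, key=lambda n: score(n, cpu, mem))  # ties go to the first node
    best.cpu_used += cpu
    best.mem_used += mem
    return best.name


workers = [NodeState("worker-1", 1000, 1024), NodeState("worker-2", 1000, 1024)]
print([schedule(500, 512, workers) for _ in range(3)])  # ['worker-1', 'worker-2', 'worker-1']
```

Favouring the emptiest node spreads pods across workers; a bin-packing rule that favours the fullest node would instead free up whole machines.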

The control plane is also responsible for monitoring all aspects of the cluster, enabling it to perform additional useful functions such as automatically reallocating workloads in case of failure, scaling up tasks which need more resources and otherwise ensuring that the assigned workloads are always operating correctly.
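The monitor-and-correct behaviour is often described as a reconciliation loop: observe the actual state, compare it with the desired state, and act on the difference. The sketch below is a hypothetical, single-pass version over a `{pod_name: node_name}` map; real controllers watch the API server instead, and the sequential `pod-N` naming is an assumption (real ReplicaSets generate random name suffixes).

```python
from itertools import count
from typing import Iterator


def reconcile(desired: int, pods: dict[str, str], healthy_nodes: list[str],
              name_seq: Iterator[int]) -> dict[str, str]:
    """One pass of a simplified control loop over a {pod_name: node_name} map.

    Hypothetical: real controllers watch the API server rather than take a dict.
    """
    # Observe: pods on nodes that are no longer healthy are treated as lost.
    running = {pod: node for pod, node in pods.items() if node in healthy_nodes}
    # Act: remove surplus pods if there are more than desired.
    for pod in sorted(running)[desired:]:
        del running[pod]
    # Act: create replacement pods on the least-loaded healthy node.
    while len(running) < desired and healthy_nodes:
        load = {node: 0 for node in healthy_nodes}
        for node in running.values():
            load[node] += 1
        target = min(healthy_nodes, key=lambda node: load[node])
        running[f"pod-{next(name_seq)}"] = target
    return running


names = count(3)
before = {"pod-0": "node-a", "pod-1": "node-b", "pod-2": "node-b"}
after = reconcile(3, before, ["node-a", "node-c"], names)  # node-b has failed
print(after)  # {'pod-0': 'node-a', 'pod-3': 'node-c', 'pod-4': 'node-a'}
```

Running the same pass repeatedly is safe: once the actual state matches the desired state, it changes nothing.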

Speaking Kubernetes

- Cluster: A set of nodes that run containerised applications managed by Kubernetes.

- Pod: The smallest unit in the Kubernetes object model, used to host containers.

- Control plane node: The orchestration layer that provides interfaces to define, deploy and manage the lifecycle of containers.

- Worker node: A node that hosts applications as containers. A Kubernetes cluster usually has multiple worker nodes (at least one).

- API server: The primary control plane component, which exposes the Kubernetes API and enables communication between cluster components.

- Controller-manager: A control plane daemon that monitors the state of the cluster and makes the changes needed for the cluster to reach its desired state.

- Container runtime: The software responsible for running containers by coordinating the use of system resources across containers.

- Kubelet: An agent that runs on each worker node in the cluster and ensures that containers are running in a pod.

- Kubectl: A command-line tool for controlling Kubernetes clusters.

- Kube-proxy: A network proxy that runs on each node and maintains network rules there, enabling network communication to pods from inside or outside the cluster.

- CNI: The Container Network Interface, a specification and set of tools that define networking interfaces between network providers and Kubernetes.

- CSI: The Container Storage Interface, a specification that lets data storage tools and applications integrate with Kubernetes clusters.
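To see how several of these terms fit together, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The Deployment name, labels, image and port are placeholders. Once applied, kubectl sends the object to the API server, the controller-manager creates pods to match the requested replica count, and the kubelet on each assigned worker node asks the container runtime to start the containers.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment; name, image and port are placeholders."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired state: how many pods should exist
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Save the output to a file, then apply it with: kubectl apply -f <file>.json
    print(json.dumps(deployment_manifest("web", "nginx:1.25", replicas=3), indent=2))
```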

Why use Kubernetes?

Kubernetes is a platform to run your applications and services. It’s cloud-native, offers operational cost savings and faster time-to-market, and is maintained by a large community. Developers like container-based development because it helps break up monolithic applications into more maintainable microservices. Kubernetes lets their work move seamlessly from development to production, shortening time-to-market for a business’s applications.

Kubernetes works by:

- Orchestrating containers across multiple hosts

- Ensuring that containerised apps behave in the same way in all environments, from testing to production

- Controlling and automating application deployments and updates

- Making more efficient use of hardware to minimise resources needed to run containerised applications

- Mounting and adding storage to run stateful apps

- Scaling containerised applications and their resources on the fly

- Declaratively managing services, which guarantees that applications are always running as intended

- Health-checking and self-healing applications with auto-placement, auto-restart, auto-replication and autoscaling

- Being open source (all Kubernetes code is on GitHub) and maintained by a large, active community
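The autoscaling point can be made concrete with the formula documented for the Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies it with a tolerance band (the controller’s default tolerance is 10%) and clamps the result to minimum and maximum replica counts. The function name and default bounds are hypothetical, and it leaves out the real controller’s handling of missing metrics, unready pods and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation (hypothetical helper, not the controller's code)."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to its target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 50% target -> ceil(4 * 1.8) = 8 pods
print(desired_replicas(4, current_metric=90, target_metric=50))
```

Rounding up with `ceil` errs on the side of extra capacity, and the tolerance band stops the replica count from flapping on small metric fluctuations.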

What Kubernetes is not

Kubernetes is a platform that provides configuration, automation and management capabilities around containers. It has a vast tooling ecosystem and can address complex use cases, which is why many people mistake it for a traditional Platform-as-a-Service (PaaS).

It is important to distinguish between the two. Unlike a PaaS, Kubernetes does not:

- Limit the types of supported applications or require a dependency handling framework

- Require applications to be written in a specific programming language, or dictate a specific configuration language or system

- Deploy source code or build applications, although it can be used to build CI/CD pipelines

- Provide application-level services, such as middleware, databases and storage clusters, out of the box. Such components can be integrated with k8s through add-ons.

- Provide or dictate specific logging, monitoring and alerting components

Source: https://ubuntu.com/kubernetes/what-is-kubernetes